Article by Penny Rafferty in Berlin // Tuesday, Dec. 26, 2017

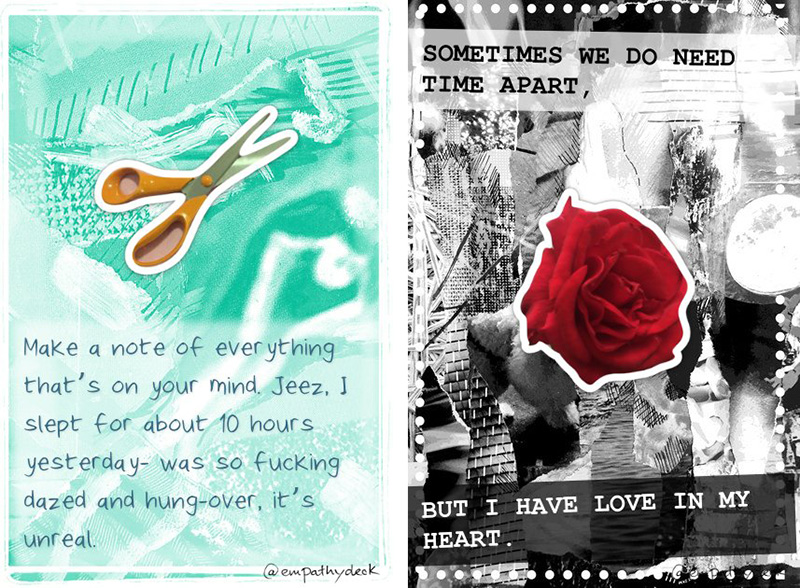

Erica Scourti’s practice mixes performance art, autobiographical discourse, and code. Her ‘Empathy Deck’ is a bot with feelings: it responds to its Twitter followers with custom-made cards specially designed by the Greek artist to offer empathetic reactions. The cards have a cut-and-paste cuteness to them: collaged ice creams, amethyst stones, Love Heart sweets and moon cycles are some of the frequent motifs. At times, a female face appears on the cards; one assumes this to be Scourti herself. To respond to its followers’ tweets, the bot pulls fragments from the artist’s own diary, as well as language drawn from self-help books. It sends phrases like “The truth needs no defence either way” or “we have tried all of the above….many times, with little success. I am still fearless and that is exactly what he hated” to its followers, hoping to cheer them up and provide support. In a world of trolls, dark web creations and social media psycho-hacks, a well-meaning bot is the ideal respite. But what does it mean when we start to automate empathy? Berlin Art Link caught up with Scourti to find out.

Erica Scourti: ‘Empathy Deck’ installation view at Group Therapy, UNSW Galleries, Sydney, 2016 // Courtesy of the artist and UNSW Galleries, Sydney

Penny Rafferty: In light of the featured topic ‘Automation’, I instantly thought of your ‘Empathy Deck’ bot. Do you think it’s fair to look at it as an automated procedure of self-help?

Erica Scourti: Yeah. Or maybe it’s more like guidance, in the same way that astrology, tarot and other divinatory practices offer somewhat cryptic support towards self-knowledge rather than just outright therapy.

It’s interesting you say self-help, not just help, since its primary function is to respond to other people, its followers, not me; but actually, I had been very depressed around the time of its inception (see my video ‘Negative Docs’ for more on that topic), so perhaps by sharing my personal problems, humiliations and anxieties, the bot reflects a desire to alleviate the feelings of aloneness that depression creates.

PR: In what sense?

ES: Well, when others respond, you feel less alone, and the gesture of sharing your own difficulties can often help others (as long as you’re not sharing shocking, disturbing or otherwise triggering material with no warning). The bot acted as a sort of automated advisor, looking for ‘common ground’ between its followers’ tweets and my own diaries. The anecdote or feeling it found created a moment of connection and humour, because of the inherent silliness of an automated process earnestly responding to its human followers, and because its responses are based on a real person’s feelings, experiences and life. Just having distance from the original tweeted sentiment acts therapeutically in some way: it reframes it and provides a different perspective. I set out with the intention that it would be a sort of always-on proxy friend.

PR: But in essence, it is an artwork, right?

ES: Yes. It’s an artwork first and foremost, and while it definitely both investigates and enacts gestures of automated friendship, it’s not a therapeutic tool. As one pinned tweet says, it equally explores performative and automated autobiographical gestures through an intimate public sphere of direct interaction. Like a scaled-up Mail Art for the Twitter age.

Erica Scourti: ‘Empathy Deck’, 2016–18, sample card // Courtesy the artist

PR: Are you spiritually-inclined normally?

ES: Arrghhh. I kind of hate the word spiritual. It’s a sort of marketing/lifestyle shorthand for the commodification of self-care, usually with a large dose of cultural appropriation of Eastern religion thrown in. But that said, I do meditate and I’ve read a lot of what could be called spiritual texts (e.g. the ‘Dhammapada’, ‘Tao Te Ching’, ‘Dark Night of the Soul’), again mostly during times I have struggled with my mental health, and felt I needed some guidance. However, I prefer to read them as ethical handbooks that offer ‘ways of living’ in relation to community, in a way that texts by Audre Lorde and Sara Ahmed do, too; what is often overlooked in discussions of faith-based texts is the focus on collective action, care and ethics, a million miles from the mostly self-centred, apolitical pursuit of happiness ‘for me, by me’ that the spiritual marketplace fosters.

PR: In some ways we could call the bot a charlatan; in others we could see it as another unidentifiable emotional generator. The latter is almost the way most people understand tarot: the guidance comes from another realm anyway.

ES: [laughs] I love the idea of the bot as a charlatan! That has always been the fear with any form of guidance or support that can’t be scientifically ‘proven’, never mind that proof is not the reason people turn to things like tarot, psychics or astrology in the first place, or, historically, to spiritual mediums (who were often accused of being charlatans). As Karen Gregory says: “rather than a doctrine that must be believed in, Tarot is best thought of as speculative, experiential practice through which one opens oneself up to the dynamism of matter and energy.” I wanted the Deck to embrace this chance-based format which, though automated (isn’t astrology automated, or at least totally formulaic, too?), could give some insight precisely through one’s personal interpretation of it.

In a way it is producing free-floating intensities of feeling, reflecting the affective economy that prizes heightened states of emotion for their supposed authenticity and humanity.

PR: The bot also contains parts of you, though. Personal diary entries. I wonder if this makes the bot a prosthetic limb rather than its own entity? A sort of confessional glitch?

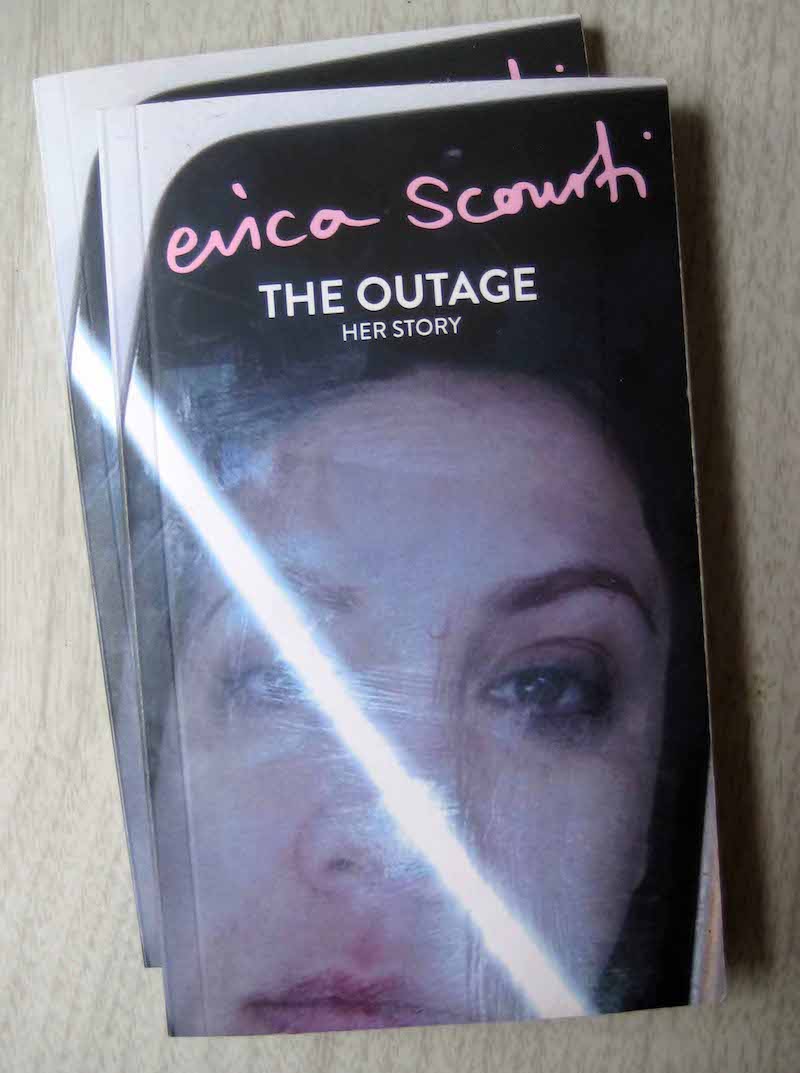

ES: It’s a proxy of me, a stand-in so to speak, since my diary is the primary text (300,000 words of it!), with the secondary texts being other self-help, advice and self-knowledge literature. This means it is always using something I originally wrote as an impetus-cum-diary, even if slightly reconstituted. Unlike with most bots, a lot of its output is quoted verbatim and, of course, I can’t control what comes out (beyond the huge kill list of words it never says and never responds to, which I call its ‘empathetic framework’). It’s also another sort of outsourced autobiography, picking up where my ghostwritten memoir ‘The Outage’ left off, though that was written by a human, based only on what they could find about me online and in a folder of ‘intimate data’: YouTube, Amazon, URL and Google search histories and recommendations, and a bunch of Facebook messages and emails.

Erica Scourti: ‘The Outage’, paperback book, Banner Repeater Paperbacks, 2014 // Courtesy of the artist

PR: Do you think caring and labour can ever be automated beyond little pick-me-up messages?

ES: Well, on a practical level, the automation of work associated with supposedly ineffable human qualities like empathy, affection and personality is already big business, from robots for the elderly to the many automated therapy bots being tested, such as x2ai’s ‘Tess’ and ‘Woebot’, to name just a couple. Even the automation of personality in software like the email assistant ‘Crystal’, which personality-tests both you and your desired recipient before suggesting what tone to adopt, points to a horizon of automating emotional labour, a type of labour that has, not coincidentally, often been gendered female.

PR: But isn’t it becoming a gendered concept more than ever?

ES: Yes. The flurry of female digital assistants available on the market, such as ‘Cortana’, ‘Siri’ and ‘Alexa’, also offers an aspect of automated caring labour, as Helen Hester and others have argued, by replacing the traditional role occupied by the wife, mum or secretary. This suggests that it is, so the questions we need to be considering are: what effect will this have on the people, mostly women, who have traditionally worked in care professions? And what are the political implications of being able to outsource crucial mental health services to a bot? Most likely, it will be the less privileged who get the automated version, which is already the case in the UK, where CBT (a form of therapy that has been criticised for its one-size-fits-all, one might say robotic, nature) is readily prescribed by the NHS over other, more time-consuming, personalised approaches.

Erica Scourti: ‘Empathy Deck’, 2016–18, sample card // Courtesy the artist

PR: It’s interesting you started out wanting a bot to create empathy, because most sci-fi narratives deny the machine the ability to have empathy or to sustain human empathy. What were your intentions beyond this?

ES: I’m fascinated by the role that this apparently crucial human quality plays in futuristic and sci-fi narratives. It’s the lack of it, apparently, that allows machines to carry out self-serving, coldly calculating and even cruel actions without remorse. Given that most corporations (and, on the strength of the #MeToo allegations, most men) could be seen to act in a similarly unempathetic way, maybe now is the time to worry more about the inhumanity of many humans, and the oppressive racial and patriarchal structures they uphold.

PR: And your bot?

ES: I wanted to see whether it was possible to create moments of ‘genuine’ connection (quotes because everything is mediated, even genuineness), and one of my favourite aspects of the project is seeing others interact with it: saying ‘you ok hon?’ when it’s come out with something incomprehensible or especially sad, or retweeting its cards. It makes me wonder whether what draws people in is the serendipity of the sentiment, and the fact that they know it’s not staged, affected or calculated. Unlike most people on social media, you definitely couldn’t accuse a bot of trying to flatter or manipulate you.