by William Kherbek // Dec. 20, 2022

In the flood of writing and pondering about the advance of artificial intelligence-enabled art, the word “uncanny” keeps popping up. Writers and artists frequently describe the warped, hyper-real/hyper-cursed images that programs such as DALL-E and Midjourney produce as possessed of the kind of eerie life-but-not-life effects discussed in Sigmund Freud’s 1919 essay ‘The Uncanny.’ Freud was building on an earlier essay by another German-speaking pioneer of psychology, Ernst Jentsch, whose 1906 essay, ‘On the Psychology of the Uncanny,’ potentially speaks more directly to the ‘machinic uncanny’ of AI than Freud’s. Jentsch writes that the uncanny is a by-product of an ‘intellectual uncertainty’ wherein one ‘does not know one’s way’ about in the strange territory the uncanny produces. Intellectual uncertainty characterises the present moment as well as any term; no one knows quite what is happening because so much is happening, so often, and so visibly. We live in a kind of “informational uncanny,” and when one considers the ways in which AI devices are trained, using massive flows of data, one could argue that the AI entities we have created also have the ability to experience it for themselves. An AI device is fed with images or text, then is expected to recognise what is relevant in the information it is presented with so as to produce an output based on this very information. The machine, often a neural network of linked nodes (generative adversarial networks or GANs are particularly popular versions of this technology for image production), like Jentsch’s subject experiencing the uncanny, does not start by “knowing its way” in the information it receives; it navigates based on the shifting materials that flow into it. It could be the case that AI images strike so many writers as uncanny because they are an expression of the informational uncanny, produced by and through an uncanny methodology.

Sandra Rodriguez: ‘Chom5ky vs Chomsky,’ (2022), digital rendering, VR application // Photo by NFB/SCHNELLE BUNTE BILDER

One risks getting bogged down in long-standing AI debates about what machines are doing, or, indeed, “feeling,” when they are performing machinic cognition. The notion of machinic artistic subjectivity as being inherently uncanny is perhaps a fruitful way to understand the way the works produced by it are received; it presents a true, unsettled mirror of the moment. Judging from a number of recent exhibitions in Berlin which involve AI, and from conversations with several artists working with AI systems in their practice, the embrace of a kind of instability at the heart of AI-produced works seems widespread.

A considerable level of anxiety around the advance of AI is caused by the possibility of a kind of totalitarian framework for human activity. AI will find ineluctable answers to the issues that face the world or the ecosystem, and humans will have to suffer these conclusions and their consequences. Such power dynamics will rely on the “certainty” that super-intelligences could produce. The ostensible “neutrality” of AI systems, powerfully questioned by the writer Ruha Benjamin in her work on race and digital culture, will write out the ambiguities of life. This tendency to seek certainty from AIs was a major theme of Sandra Rodriguez’s ‘Chom5ky vs Chomsky’ (2022) at Berlin’s KINDL. Upon entering a rocky, desert landscape with the aid of virtual reality goggles, visitors can pose questions to an AI rendering of Noam Chomsky, which does its best to conduct an “Ask Me Anything” with the audience. While there is a playful dimension to the work (indeed, Rodriguez explicitly emphasises this aspect of the piece), the desire for certainty on the part of human beings is one of the most profound and longstanding features of what it has meant to be human. From the Delphic Oracle to the present, humans seeking certainty have only found its opposite. As Chomsky’s own linguistic programmer recognises, words are inherently equivocal. There is no “true” meaning of any word. Chomsky (the real one), it should be noted, has long been a sceptic of the more radical expectations and assertions of AI theorists. Thus, in seeking answers, the answers push us deeper into the uncanny; meaning drifts away the moment it is asserted.

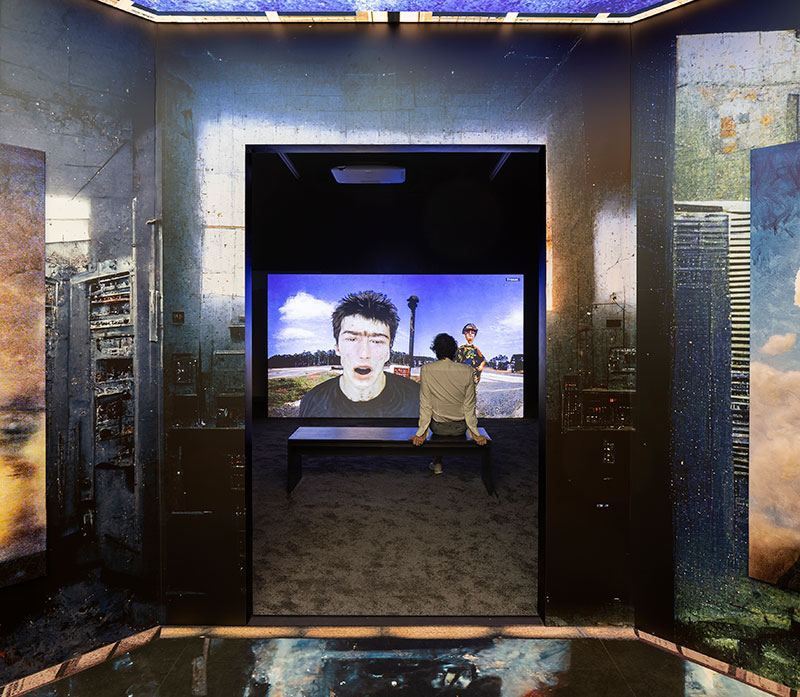

Sandra Rodriguez: ‘Chom5ky vs Chomsky,’ (2022), installation view // Courtesy of NFB/SCHNELLE BUNTE BILDER, photo by Tommi Aittala

This uncertain, constantly shifting and disintegrating ground was also a feature of Jon Rafman’s DALL-E-informed show at Sprüth Magers. In Rafman’s works, characters unfold various narratives of lost love, suffering, troubled family dynamics and paranoia about being watched while AI-generated digital images illustrate their tales. Characters emerge and fade away, stories that seem unbelievable become true and then untrue, and then some hybrid of the two in their telling. Over the course of the exhibition, the narratives emphasise the human needs of the narrator, and in doing so reveal a constant theme of such ambitious AI projects: whatever the AI does on our behalf, what ultimately makes its cognition, its “learning,” valuable is what the human wants.

Jon Rafman: ‘Counterfeit Poast,’ 2022, installation view, Sprüth Magers, Berlin // Courtesy of the Artist and Sprüth Magers, Photo by Timo Ohler

While the unknowable and unsettling (indeed, unsettleable) qualities of the uncanny are causes for anxiety in the writings of Jentsch and Freud, many artists working with AI and machinic learning systems find the unknowable a fertile ground for artistic exploration. To not know one’s way can be a very useful artistic methodology. Libby Heaney is an artist whose work has returned to one of the most troubling and uncanny aspects of AI-enabled image production, the deep fake. Heaney’s fakes, in which she has cohabited the bodies of former German Chancellor Angela Merkel, former UK Prime Minister Theresa May and Elvis Presley, are not always particularly deep, but that, for Heaney, is part of the point. In conversation for this piece, Heaney described the tension within the deep fakes as a crucial element of the works’ meanings. In reference to ‘Elvis’ (2019), Heaney says the deep fake was to be understood as “me and Elvis, not just me trying to be Elvis.” The Elvis overlay flickers into greater or lesser fidelity over the course of Heaney’s work; he is never truly there, but also never truly not there. If Elvis has indeed left the building, Heaney seems to ask, where has he gone? AI-generated “stars” are now more popular than some flesh and blood musicians; the idea of Ghost Elvis eternally performing in metaverse Las Vegas is a realistic proposition. This work and others by Heaney, who is trained as a physicist and concerned with notions such as quantum entanglement and particle superposition, use AI-enabled processes not only to question how one finds one’s way about in a space, but what space itself is.

As metaverse and Web3 technologies advance, the boundaries between material space and digital space will become even fuzzier. The notion of “superposition,” which Heaney references, describes the capacity for a particle to be in two theoretical positions simultaneously, a state of being that could become a common subjective experience. To be in the material world and in digital space is, of course, common enough in an age of live streaming, but reducing the logic of both spaces to the logic of the physical world is not. That is, just because you see someone inside your computer doesn’t mean they are really in your computer, though this may change in the coming years in part thanks to the uncanny materialities of AI-enabled production. How artists reckon with these dauntingly slippery terrains defies easy discussion, but looking back to Jentsch’s understanding of the uncanny, one may ponder how the uncanny may come to appear to people in the future. Will the “unheimlich,” the “unhomely” in a literal translation from the original German, ultimately become our home?